From Unit Tests to CI: A Practical Guide for Professional ROS 2 Projects

In previous posts, we explored why testing matters in real ROS 2 projects and why some nodes are harder to test than others. This final piece brings everything together.

The goal here is not to introduce new tools, but to answer a very practical question:

What does a complete, professional ROS 2 testing strategy actually look like—and how do you automate it to prevent regressions?

This is the missing guide many teams struggle to find when moving from prototypes and demos to long-lived robotic products. Not because the tools don’t exist, but because it’s hard to see how all the pieces fit together in a coherent, scalable way.

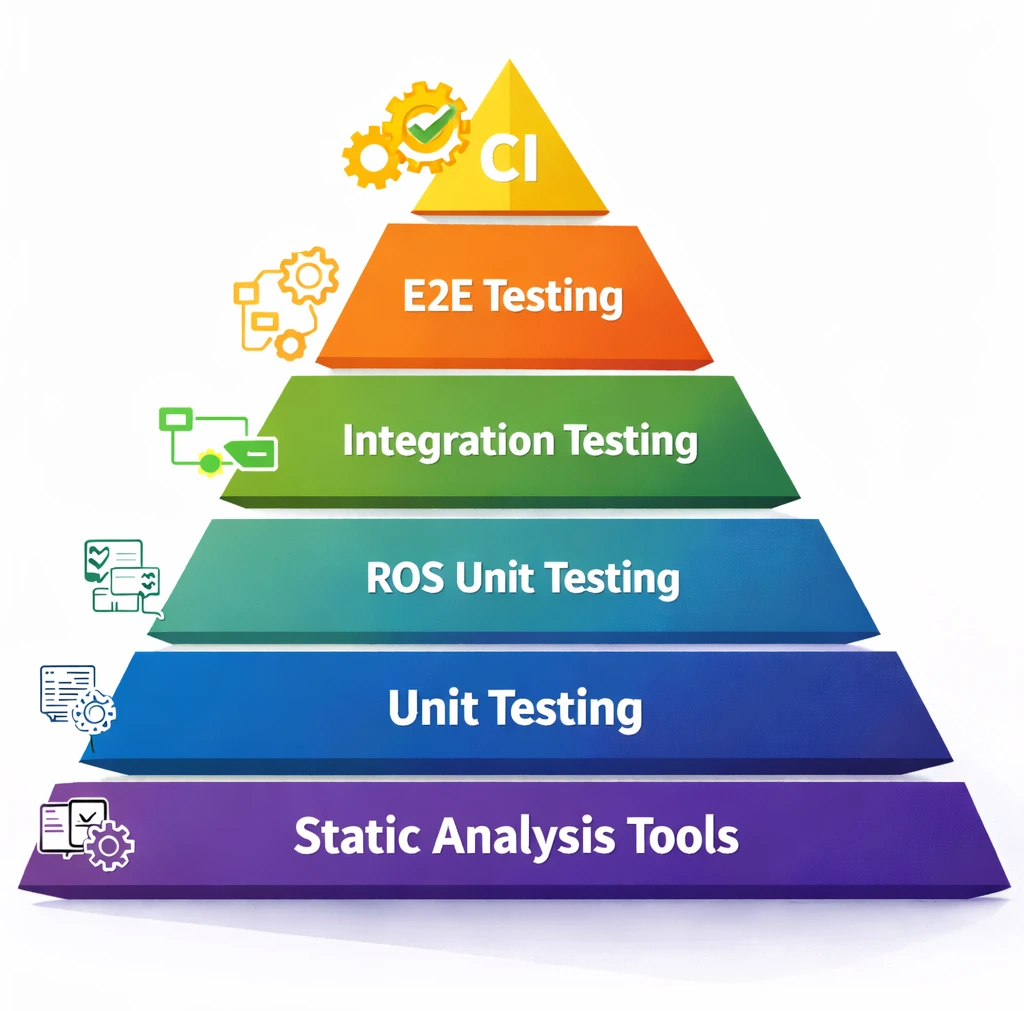

The Testing Pyramid in ROS 2

Testing in robotics is often associated with simulation, bags, or full system validation on a robot. While those approaches are important, relying on them alone is inefficient and risky. Problems are discovered late, debugging is slow, and every change feels dangerous.

In practice, robust ROS 2 projects rely on a layered testing pyramid. Each layer targets a different kind of risk and provides fast feedback at the appropriate scope. The lower layers run often and cheaply; the higher ones are more expensive and used selectively.

Understanding this structure is key to investing effort where it delivers the most value—especially when teams and codebases grow.

The Foundation: Static Analysis and Linters

Before code ever runs, many problems can already be detected.

Formatting inconsistencies, unsafe constructs, missing dependencies, invalid package.xml files, or broken CMake logic are common sources of friction in ROS 2 projects. None of them require a robot—or even a running node—to catch.

Static analysis and linters form the foundation of the pyramid. They are fast, deterministic, and trivial to automate. More importantly, they enforce a shared baseline of quality across the team, removing noise from code reviews and preventing avoidable errors from propagating further.

In professional environments, this layer is non-negotiable. If code fails basic quality checks, it should not progress further in the pipeline. This is where CI starts paying for itself almost immediately.

To learn more about this layer, see our previous post.
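To make the "no robot required" point concrete, here is a minimal sketch of a manifest check written with only the Python standard library. It is an illustration, not a replacement for the ament_lint tooling a real project would use. The function name `find_manifest_problems` and the sample manifests are assumptions made up for this example.

```python
import xml.etree.ElementTree as ET

# Tags a package.xml manifest is expected to contain at least once.
REQUIRED_TAGS = ("name", "version", "description", "maintainer", "license")


def find_manifest_problems(manifest_text: str) -> list[str]:
    """Return human-readable problems found in a package.xml string."""
    try:
        root = ET.fromstring(manifest_text)
    except ET.ParseError as error:
        return [f"not well-formed XML: {error}"]

    problems = []
    if root.tag != "package":
        problems.append(f"root element is <{root.tag}>, expected <package>")
    for tag in REQUIRED_TAGS:
        element = root.find(tag)
        if element is None or not (element.text or "").strip():
            problems.append(f"missing or empty <{tag}>")
    return problems


# Hypothetical manifests used only to exercise the check.
GOOD = """<package format="3">
  <name>demo_pkg</name>
  <version>0.1.0</version>
  <description>Demo package</description>
  <maintainer email="dev@example.com">Dev</maintainer>
  <license>Apache-2.0</license>
</package>"""

BAD = GOOD.replace("<license>Apache-2.0</license>", "")

print(find_manifest_problems(GOOD))  # []
print(find_manifest_problems(BAD))   # ['missing or empty <license>']
```

A check like this runs in milliseconds and needs neither a built workspace nor a running node, which is why this layer is so cheap to enforce on every change.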

Validating Core Logic Outside ROS

Once the basics are covered, the next question is simple: does the logic behave correctly?

This layer focuses on validating pure behavior, independent of ROS 2. Algorithms, decision rules, and data transformations are tested using plain types, without publishers, subscribers, executors, or parameters involved.

As discussed in the previous post on testable design, this separation is intentional. Logic that does not depend on middleware is easier to reason about, faster to test, and safer to refactor. These tests provide the tightest feedback loop and should catch the majority of defects early—when they are cheapest to fix.

Just as importantly, this layer actively shapes better architecture. Code that is easy to test in isolation is usually easier to understand, extend, and maintain in production.
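As a rough illustration of this layer, the sketch below tests a small decision rule using plain floats and a dataclass, with no ROS 2 imports at all. The rule itself (`limit_speed`, `SpeedLimits`, and the distances used) is hypothetical and chosen only to show the shape of such a test.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SpeedLimits:
    stop_distance_m: float = 0.5
    slow_distance_m: float = 2.0
    max_speed_mps: float = 1.0


def limit_speed(requested_mps: float, obstacle_distance_m: float,
                limits: SpeedLimits = SpeedLimits()) -> float:
    """Scale the requested forward speed down as an obstacle gets closer."""
    if obstacle_distance_m <= limits.stop_distance_m:
        return 0.0
    capped = min(requested_mps, limits.max_speed_mps)
    if obstacle_distance_m >= limits.slow_distance_m:
        return capped
    # Linear ramp between the stop distance and the slow distance.
    span = limits.slow_distance_m - limits.stop_distance_m
    scale = (obstacle_distance_m - limits.stop_distance_m) / span
    return capped * scale


# Plain pytest-style tests: no node, executor, or middleware involved.
def test_stops_inside_stop_distance():
    assert limit_speed(1.0, 0.3) == 0.0


def test_keeps_requested_speed_when_path_is_clear():
    assert limit_speed(0.8, 5.0) == 0.8


def test_ramps_linearly_between_thresholds():
    assert limit_speed(1.0, 1.25) == 0.5
```

A node would then only need to read sensor data, call `limit_speed`, and publish the result, leaving very little untested logic inside the ROS-specific code.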

Testing ROS 2 Nodes in Isolation

Correct logic alone is not enough. In ROS 2, many failures come from incorrect interfaces rather than incorrect algorithms.

This layer focuses on validating nodes as ROS 2 entities: parameters are declared and propagated correctly, publishers and subscribers are created with the expected names and types, lifecycle transitions behave as intended, and data flows through the node as expected.

These tests still run in isolation, without launching a full multi-node system. That isolation is essential. Without it, tests become flaky, slow, and hard to trust, especially when executed in parallel. Domain isolation, such as giving each test process its own ROS_DOMAIN_ID, keeps concurrently running tests from discovering each other's nodes and topics, which makes results far more deterministic.
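One possible way to get domain isolation for parallel test workers is to derive a distinct ROS_DOMAIN_ID from the worker name. The sketch below assumes tests are run with pytest-xdist, which exposes the worker name in the `PYTEST_XDIST_WORKER` environment variable. The helper names are hypothetical, and this is not necessarily how any particular project or the workshop repository does it.

```python
import os
import re

# The ROS 2 documentation describes domain IDs 0-101 as safe on all platforms.
MAX_SAFE_DOMAIN_ID = 101


def domain_id_for_worker(worker_name: str) -> int:
    """Map a pytest-xdist worker name such as 'gw3' to a ROS_DOMAIN_ID."""
    match = re.fullmatch(r"gw(\d+)", worker_name)
    index = int(match.group(1)) if match else 0
    # Skip 0, the default domain, so tests never talk to a system a developer
    # happens to have running. IDs wrap around after 101 workers.
    return 1 + index % MAX_SAFE_DOMAIN_ID


def isolated_environment(base_env=None) -> dict:
    """Return a copy of the environment with a per-worker ROS_DOMAIN_ID."""
    env = dict(os.environ if base_env is None else base_env)
    worker = env.get("PYTEST_XDIST_WORKER", "gw0")
    env["ROS_DOMAIN_ID"] = str(domain_id_for_worker(worker))
    return env


print(isolated_environment({"PYTEST_XDIST_WORKER": "gw7"})["ROS_DOMAIN_ID"])
```

The resulting environment would be applied before the ROS 2 context is initialized, or passed to any process the test launches. Note that this only separates workers on one machine. Two CI jobs sharing a network could still pick the same ID unless the runner itself is isolated.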

This layer bridges the gap between pure logic validation and system-level behavior.

Verifying Interaction Between Nodes

As systems grow, risk shifts from individual components to their interactions.

Nodes that work perfectly on their own can still fail when combined. Timing assumptions, startup order, implicit contracts, or mismatched expectations often only surface once multiple components are running together.

Integration tests address this by launching small groups of nodes and asserting observable behavior. They verify that communication paths are wired correctly, that nodes react to each other as expected, and that higher-level workflows function as designed.

These tests are slower and more complex than isolated tests, but they cover a class of failures that no amount of unit testing can detect. Their purpose is not to replace lower layers, but to confirm that independently validated components actually work together.
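Because timing assumptions and startup order are typical sources of trouble at this level, integration assertions tend to be more reliable when they poll for an observable condition with a deadline instead of sleeping for a fixed time. The sketch below shows that pattern with the standard library only. `wait_for` is a hypothetical helper, and the `received` list stands in for messages a test subscriber would collect.

```python
import threading
import time


def wait_for(condition, timeout_s=5.0, poll_interval_s=0.05):
    """Poll `condition` until it returns a truthy value or the timeout expires."""
    deadline = time.monotonic() + timeout_s
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout_s} s")
        time.sleep(poll_interval_s)


# Stand-in for messages collected by a subscriber callback in a real test.
received = []

# Simulate another node that publishes its status a little after startup.
threading.Timer(0.1, received.append, args=("READY",)).start()

# The assertion succeeds as soon as the message arrives, with no fixed sleep.
print(wait_for(lambda: "READY" in received, timeout_s=2.0))
```

In a real integration test the condition would inspect data gathered from the launched nodes, and the timeout becomes the explicit, documented bound on how long the workflow is allowed to take.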

End-to-End Confidence, Used Sparingly

At the top of the pyramid sit end-to-end and system-level tests.

These validate complete execution paths using realistic configurations, recorded data, or simulation. They provide confidence that the system behaves correctly from the outside, often from the same perspective as a user or operator.

They are also the most expensive tests to write, run, and maintain. For that reason, mature teams keep this layer intentionally small, focusing on critical behaviors rather than exhaustive coverage.

When the lower layers are solid, end-to-end tests become a safety net—not the primary debugging tool.

The Missing Piece: Automating Everything with CI

None of this matters if tests are optional.

A professional ROS 2 project wires all these scopes into a single CI pipeline that runs automatically on every pull request. The pipeline becomes the quality gate and the shared source of truth for the team.

A typical flow looks like this:

- Build the workspace
- Run static analysis and formatting checks
- Execute algorithm-level and ROS-aware unit tests
- Run integration tests
- Optionally trigger heavier end-to-end checks on schedules or release branches

This pipeline prevents regressions, enforces standards, and removes subjective judgment from code quality decisions. Over time, it also changes team behavior: smaller changes, safer refactors, and fewer surprises late in the development cycle.
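The flow above can be sketched as a small fail-fast stage runner. In practice this logic usually lives in the CI system's own configuration, so treat this purely as an illustration of the gating behavior. `colcon build` is the real build command, while the `ci/*.sh` script paths, the `Stage` class, and `run_pipeline` are hypothetical placeholders for however a project invokes each test scope.

```python
import subprocess
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    command: list
    heavy: bool = False  # heavy stages run only on schedules or release branches


# The ci/*.sh scripts are hypothetical; substitute the project's own commands.
STAGES = [
    Stage("build", ["colcon", "build"]),
    Stage("static-analysis", ["./ci/lint.sh"]),
    Stage("unit-tests", ["./ci/unit_tests.sh"]),
    Stage("integration-tests", ["./ci/integration_tests.sh"]),
    Stage("end-to-end", ["./ci/end_to_end.sh"], heavy=True),
]


def run_pipeline(stages, include_heavy=False, runner=subprocess.call):
    """Run stages in order and stop at the first failure (the quality gate)."""
    for stage in stages:
        if stage.heavy and not include_heavy:
            print(f"[skip] {stage.name}")
            continue
        print(f"[run ] {stage.name}: {' '.join(stage.command)}")
        exit_code = runner(stage.command)
        if exit_code != 0:
            print(f"[fail] {stage.name} exited with {exit_code}")
            return exit_code
    return 0
```

A pull-request job would call `run_pipeline(STAGES)` and exit with its return value, while a nightly or release job would pass `include_heavy=True`. The `runner` parameter exists so the ordering and fail-fast behavior can themselves be tested without invoking any real tools.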

A Concrete Example You Can Reuse

All of these ideas are implemented end-to-end in our ROS 2 Testing: A Practical Survival Guide workshop.

The repository includes:

- A structured testing pyramid applied to real ROS 2 code
- Examples of testable node design
- ROS-aware tests with proper isolation
- Integration tests and CI workflows you can adapt to your own projects

The goal is not to provide a one-size-fits-all solution, but a concrete reference that teams can fork, learn from, and evolve.

👉 Ready to get started?

🔗 Explore the workshop materials and follow them at your own pace.

📩 Contact Ekumen if you want support designing or implementing a testing strategy for your product or team.

📰 Subscribe to our blog or newsletter to keep learning about testing, quality, and real-world robotics engineering.